UP BROADCAST RUNNING MULTICAST MTU:1400 Metric:1 Sure enough, the 4 VFs came up as completely normal NICs.Ġ9:10.2 Class 0200: 8086:15c5 ~]# ifconfigĮnp9s16f2 Link encap:Ethernet HWaddr 2E:D2:5A:F2:89:8C Only once they are created should the other 4 be booted. It is important to boot the PF VM first, because it is used to create the VFs.

In Lynx MOSA.ic™ I assigned the PF to one VM, and VFs to the other 4. I chose Buildroot Linux for all Guest OSs (GOSs) because Linux includes Intel’s SR-IOV capable ixgbe driver.

I used the LynxSecure® Partitioning System to set up the system with 5 VMs - each with 1 CPU core. This system has a 12 core Intel® Atom® C3858 (Denverton) Processor with 32GB of RAM as well as 4 X550 10 GbE NICs. This week I setup Lynx MOSA.ic ™ on a SuperMicro A2SDi-TP8F board. It is well suited to combining separate boxes running RTOS, embedded Linux and Windows-based operator-consoles into one box saving space, weight and power (SWAP) as well as cost.

Oneboard pci full#

SR-IOV enables efficient I/O Virtualization, so you can provide full network connectivity to your VMs without wasting CPU power. Combined with an embedded hypervisor, this SoC is an excellent consolidation platform. Impressively, each X550 NIC includes 64 VFs, for a total of 256 VFs onboard inside this chip. The Intel Denverton SoC combines up to 16 Atom cores with onboard PCI Express and 4 Intel X550 SR-IOV capable 10 Gigabit Ethernet (GbE) NICs. Collapsing both the physical NIC card and motherboard slot into a highly integrated SoC is perfect for embedded use cases, which tend to be rugged and compact, and where add-in cards and their connectors are a liability. So, what has all this got to do with embedded? The first System on Chip (SoC) with built-in SR-IOV capable NIC was released in 2017. The SR-IOV standard calls the master NIC the Physical Function (PF) and its VM-facing virtual NICs the Virtual Functions (VFs).

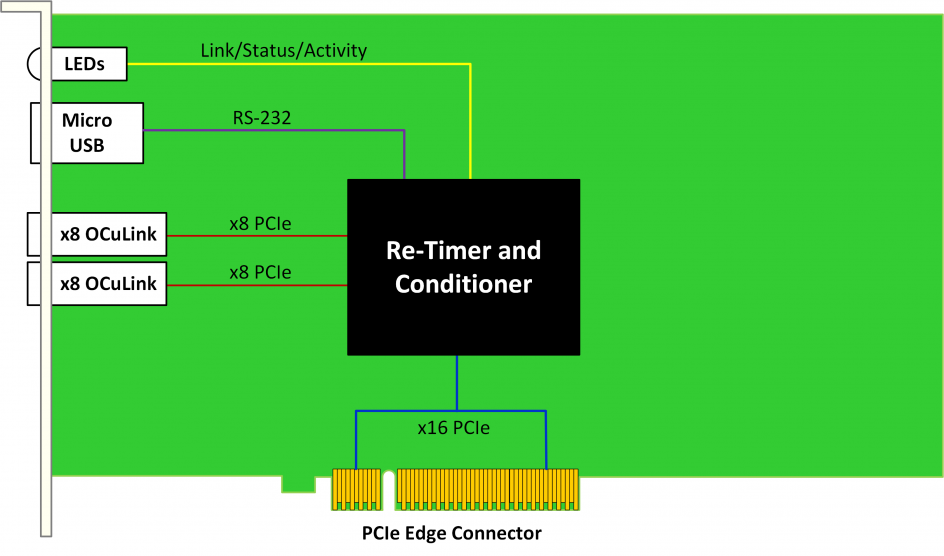

The SR-IOV standard defines how these hardware resources are shared so that hypervisors can find and use them in a common way for all makes and models of SR-IOV capable NICs. Such an I/O-Virtualization-capable-NIC replicates the VM-facing hardware resources like ring buffers, interrupts and Direct Memory Access (DMA) streams. It has one physical ethernet socket, but appears on the PCI Express bus as multiple NICs. A better approach is to build a single NIC that appears as multiple NICs to the software. The hypervisor can share NICs between the VMs using software, but at reduced network speed and with high CPU overhead. Providing network connectivity to VMs on heavily virtualized servers is a challenge. Multi Root I/O Virtualization (MR-IOV) - by contrast - is concerned with sharing PCI Express devices among multiple computers. PCI devices, bridges, and switches are cascaded off the root complex creating a tree structure. It refers to the PCI Express root complex, the core PCI component that connects all PCI devices together. The term Single Root means that SR-IOV device virtualization is possible only within one computer. We can thank the PCI-SIG for the interoperability of the vast range of computer PCI add-in cards and Intel’s famous - in tech circles - 8086 hardware vendor ID that PCI devices report on the bus. The standard was written in 2007 by the PCI-SIG ( Peripheral Component Interconnect - Special Interest Group) with key contributions from Intel, IBM, Hewlett-Packard, and Microsoft (among others). SR-IOV is a hardware standard that allows a PCI Express device – typically a network interface card (NIC) – to present itself as several virtual NICs to a hypervisor. Single Root I/O Virtualization ( SR-IOV) is the complex name for a technology beginning to find its way into embedded devices.
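The post doesn't say how the PF VM created the VFs. On Linux with an SR-IOV capable PF driver such as ixgbe, one common way is the kernel's generic sysfs interface. The `sriov_totalvfs` attribute reports how many VFs the PF supports, and writing a number to `sriov_numvfs` asks the driver to create that many. The Python sketch below assumes that interface. The helper name `create_vfs` and its `sysfs_root` parameter are hypothetical. `sysfs_root` exists only so the logic can be exercised against a fake directory tree. On a real system the code must run as root in the VM that owns the PF.

```python
from pathlib import Path


def create_vfs(pf_ifname: str, num_vfs: int, sysfs_root: str = "/sys/class/net") -> int:
    """Request num_vfs Virtual Functions from the PF behind pf_ifname.

    Hypothetical helper built on the standard Linux SR-IOV sysfs attributes.
    Needs root, and must run in the VM that owns the PF.
    """
    device = Path(sysfs_root) / pf_ifname / "device"

    # How many VFs the PF driver says it can create.
    total = int((device / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{pf_ifname} supports at most {total} VFs, not {num_vfs}")

    numvfs = device / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == num_vfs:
        return current  # already configured, nothing to do

    if current != 0 and num_vfs != 0:
        # The kernel rejects changing one non-zero VF count to another,
        # so disable the existing VFs first.
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
    return num_vfs
```

For example, `create_vfs("enp9s0f0", 4)` would request 4 VFs on a PF named `enp9s0f0`. That interface name is only a placeholder; substitute the PF's real name. Booting the VF VMs only after this step matches the boot order described in the post.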

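The PCI address links the two halves of the VF output in this post. The first line appears to use BusyBox `lspci` format, which prints each function as bus:slot.function followed by its class code and vendor:device IDs. Class 0200 is an Ethernet controller and vendor 8086 is Intel. The interface name `enp9s16f2` is the same address written in decimal under the systemd/udev predictable-naming scheme: bus 0x09 becomes `p9`, slot 0x10 becomes `s16` and function 2 becomes `f2`. The Python sketch below decodes one such line. Its lookup tables hold only the two IDs that appear here. Its naming rule is simplified: it always emits the `f` suffix, whereas udev omits `f0` on single-function devices and handles non-zero PCI domains and other cases.

```python
import re
from typing import NamedTuple

# Only the IDs seen in this post; a real tool would consult the pci.ids database.
VENDORS = {0x8086: "Intel"}
CLASSES = {0x0200: "Ethernet controller"}

LSPCI_LINE = re.compile(
    r"^(?P<bus>[0-9a-f]{2}):(?P<slot>[0-9a-f]{2})\.(?P<func>[0-7])"
    r" Class (?P<cls>[0-9a-f]{4}): (?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})$",
    re.IGNORECASE,
)


class PciFunction(NamedTuple):
    bus: int
    slot: int
    func: int
    pci_class: int
    vendor: int
    device: int

    def ifname(self) -> str:
        """Simplified predictable Ethernet name: decimal bus, slot and function."""
        return f"enp{self.bus}s{self.slot}f{self.func}"


def parse_lspci(line: str) -> PciFunction:
    """Parse one BusyBox-style lspci line into numeric fields (all hex in the input)."""
    m = LSPCI_LINE.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognised lspci line: {line!r}")
    fields = ("bus", "slot", "func", "cls", "vendor", "device")
    return PciFunction(*(int(m[k], 16) for k in fields))


vf = parse_lspci("09:10.2 Class 0200: 8086:15c5")
print(vf.ifname(), VENDORS[vf.vendor], CLASSES[vf.pci_class])
# enp9s16f2 Intel Ethernet controller
```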

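The standard's rules, not arbitrary choices, determine where SR-IOV places VFs on the PCI Express bus. This is part of what lets every hypervisor "find and use them in a common way." The SR-IOV capability in the PF's configuration space holds a First VF Offset and a VF Stride. VF number n, counting from 1, gets the Routing ID PF RID + First VF Offset + (n - 1) * VF Stride. A Routing ID packs bus, device and function into 16 bits. The Python sketch below computes this rule. The PF address 09:00.0, the offset 0x80 and the stride 2 are hypothetical illustration values, not readings from the A2SDi-TP8F. On a real system the values come from the PF's SR-IOV capability, which pciutils' `lspci -vvv` typically lists.

```python
def bdf_to_rid(bus: int, dev: int, fn: int) -> int:
    """Pack bus/device/function into a 16-bit PCI Express Routing ID."""
    return (bus << 8) | (dev << 3) | fn


def rid_to_bdf(rid: int) -> str:
    """Format a Routing ID as bus:device.function (no domain), all in hex."""
    return f"{rid >> 8:02x}:{(rid >> 3) & 0x1f:02x}.{rid & 0x7}"


def vf_rid(pf_rid: int, first_vf_offset: int, vf_stride: int, n: int) -> int:
    """Routing ID of VF number n (1-based), following the SR-IOV offset/stride rule."""
    if n < 1:
        raise ValueError("VF numbers start at 1")
    return pf_rid + first_vf_offset + (n - 1) * vf_stride


# Hypothetical example values, not read from the board described in this post.
PF = bdf_to_rid(0x09, 0x00, 0)
OFFSET, STRIDE = 0x80, 2
print([rid_to_bdf(vf_rid(PF, OFFSET, STRIDE, n)) for n in range(1, 5)])
# ['09:10.0', '09:10.2', '09:10.4', '09:10.6']
```

With these made-up numbers, the second VF would land at the same kind of address as the `09:10.2` seen in the output. This shows why a VF's address can look unrelated to its PF's, even though the offset and stride fully determine it.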